okay at this point I should probably make a whole-ass perplexity post because this is the third time I'm featuring them in stubsack but 404media found yet more dirt

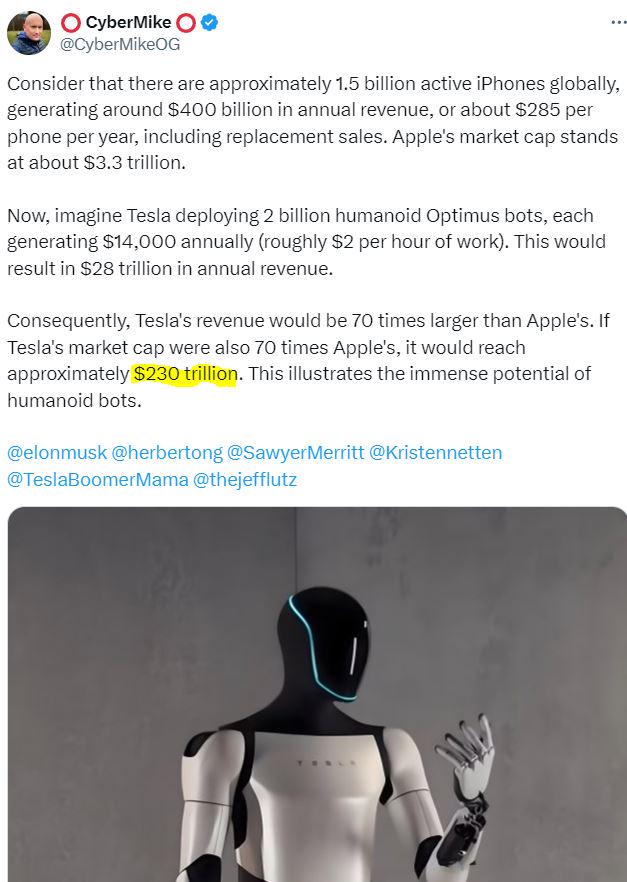

> ... which included creating a series of fake accounts and AI-generated research proposals to scrape Twitter, as CEO Aravind Srinivas recently explained on the Lex Fridman podcast.

> According to Srinivas, all he and his cofounders Denis Yarats and Johnny Ho wanted to do was build cool products with large language models, back when it was unclear how that technology would create value.

tell me again how lies and misrepresentation aren't foundational parts of the business model, I think I missed it