this post was submitted on 14 May 2025


linuxmemes


Hint: :q!


Community rules

1. Follow the site-wide rules

- Instance-wide TOS: https://legal.lemmy.world/tos/

- Lemmy code of conduct: https://join-lemmy.org/docs/code_of_conduct.html

2. Be civil

- Understand the difference between a joke and an insult.

- Do not harass or attack users for any reason. This includes using blanket terms, like "every user of thing".

- Don't get baited into back-and-forth insults. We are not animals.

- Leave remarks of "peasantry" to the PCMR community. If you dislike an OS/service/application, attack the thing you dislike, not the individuals who use it. Some people may not have a choice.

- Bigotry will not be tolerated.

3. Post Linux-related content

- Including Unix and BSD.

- Non-Linux content is acceptable as long as it makes a reference to Linux. For example, the poorly made mockery of `sudo` in Windows.

- No porn, no politics, no trolling or ragebaiting.

4. No recent reposts

- Everybody uses Arch btw, can't quit Vim, <loves/tolerates/hates> systemd, and wants to interject for a moment. You can stop now.

5. 🇬🇧 Language/язык/Sprache

- This is primarily an English-speaking community. 🇬🇧🇦🇺🇺🇸

- Comments written in other languages are allowed.

- The substance of a post should be comprehensible for people who only speak English.

- Titles and post bodies written in other languages will be allowed, but only as long as the above rule is observed.

6. (NEW!) Regarding public figures

We all have our opinions, and certain public figures can be divisive. Keep in mind that this is a community for memes and light-hearted fun, not for airing grievances or leveling accusations.

- Keep discussions polite and free of disparagement.

- We are never in possession of all of the facts. Defamatory comments will not be tolerated.

- Discussions that get too heated will be locked and offending comments removed.

Please report posts and comments that break these rules!

Important: never execute code or follow advice that you don't understand or can't verify, especially here. The word of the day is credibility. This is a meme community -- even the most helpful comments might just be shitposts that can damage your system. Be aware, be smart, don't remove France.

founded 2 years ago


Seems to only support CPU or CUDA, not AMD, sadly.

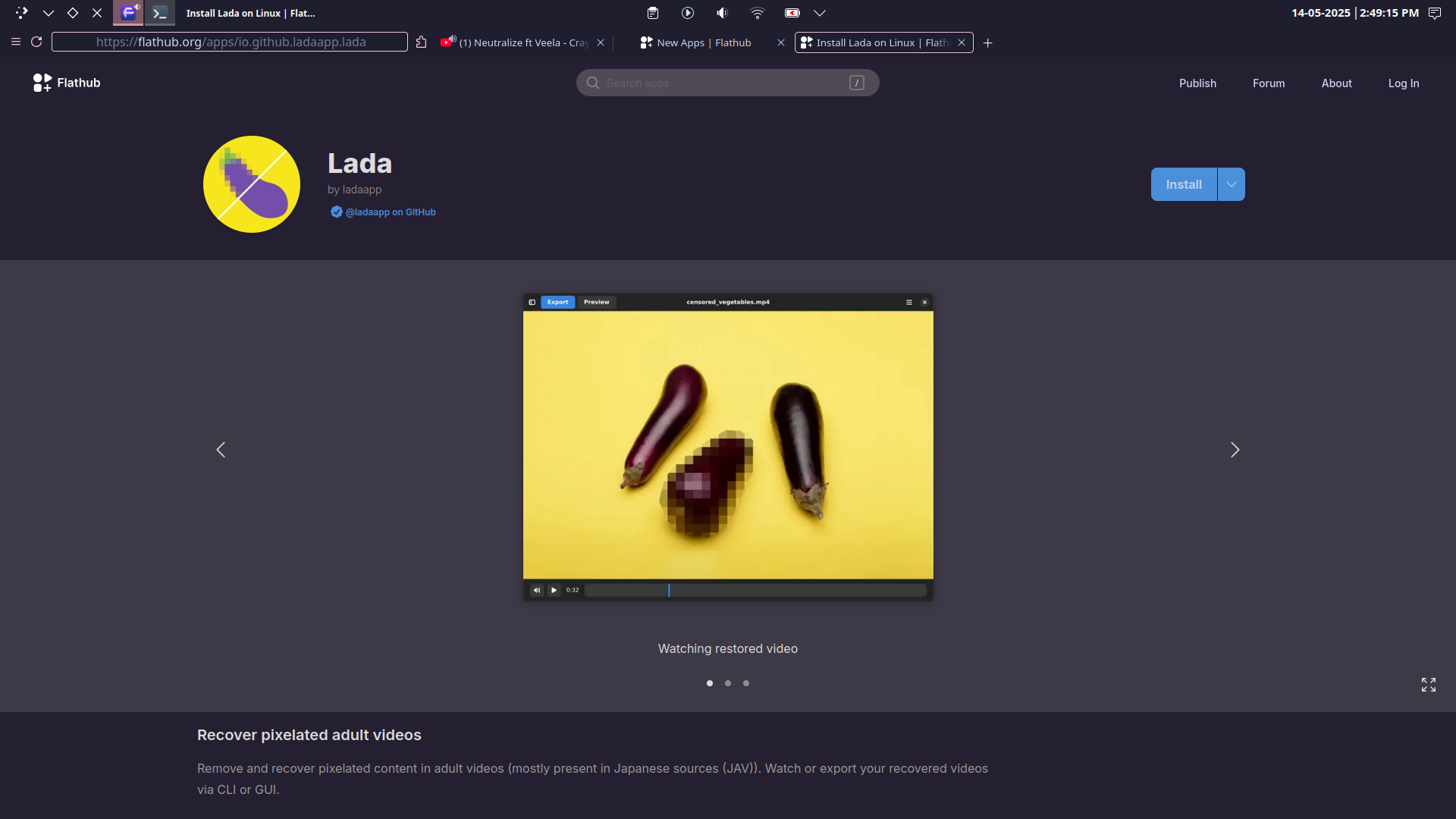

https://github.com/ladaapp/lada

I have a (rather different!) application that's released as a Flatpak, and GPU acceleration is CUDA-only there, too. It supports ROCm when compiled locally, but ROCm just can't work through the sandbox at this point, unfortunately. Not for lack of trying.

If you have an example of a Flatpak where it does work, I'd love to see their manifest so I can learn from it.

@PlantPowerPhysicist @HappyFrog f(l)atpak is a workaround, not a solution. It should not work in a non-default configuration.

For the prepackaged flathub or docker installations.

Great news: you can run this software with an AMD GPU just fine. Follow the installation instructions in your link and install PyTorch for ROCm: https://rocm.docs.amd.com/projects/install-on-linux/en/latest/install/3rd-party/pytorch-install.html
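If you go the manual route, it's worth confirming that the PyTorch you ended up with is actually the ROCm build and not a CUDA or CPU-only wheel. Here's a minimal sketch, assuming PyTorch is installed: ROCm builds set `torch.version.hip`, CUDA builds set `torch.version.cuda`, and both are `None` on CPU-only builds. The helper name `describe_backend` is just something made up for illustration.

```python
def describe_backend(torch_module) -> str:
    """Report which GPU backend a PyTorch build was compiled for.

    Hypothetical helper: checks torch.version.hip (set on ROCm builds)
    and torch.version.cuda (set on CUDA builds); both are None on CPU-only builds.
    """
    version = torch_module.version
    hip = getattr(version, "hip", None)
    cuda = getattr(version, "cuda", None)
    if hip:
        return f"ROCm/HIP {hip}"
    if cuda:
        return f"CUDA {cuda}"
    return "CPU-only"


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed")
    else:
        print("Build:", describe_backend(torch))
        # ROCm builds expose AMD GPUs through the torch.cuda namespace,
        # so this also returns True on a working AMD setup.
        print("GPU visible:", torch.cuda.is_available())
```

If it prints "CPU-only" or a CUDA version on an AMD box, pip most likely pulled the default wheel instead of the ROCm one.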

That's pretty much the standard for a lot of video processing applications

I did buy a (secondhand) nvidia card specifically for AI workloads because, yes, I realised that this is what the AI dev community has settled on, and if I try to avoid nvidia I will be making life very hard for myself.

But that doesn't change the fact that it still absolutely sucks that nvidia have this dominance in the space, and that it is largely due to what tooling the community has decided to use, rather than any unique hardware capability which nvidia have.

Huh? I can't speak to "video processing", but "NVIDIA dominance" isn't applicable at all for AI, at least generative AI. Pretty much all frameworks for LLM either officially support AMD or have an AMD fork. And literally all image gen frameworks that I am aware of officially support AMD.

I typically see people recommending buying an AMD GPU over NVIDIA in AI communities...

What AI workloads do you run?

EDIT: Even the app in the OP only supports NVIDIA GPUs for the prepackaged flathub installation. You can run this software with an AMD GPU.