Azure/AWS/other cloud computing services that host these models are absolutely going to continue to make money hand over fist. But if the bottleneck is the infrastructure, then what's the point of paying an entire team of engineers 650K a year each to recreate a model that's qualitatively equivalent to an open-source model?

BigMuffin69

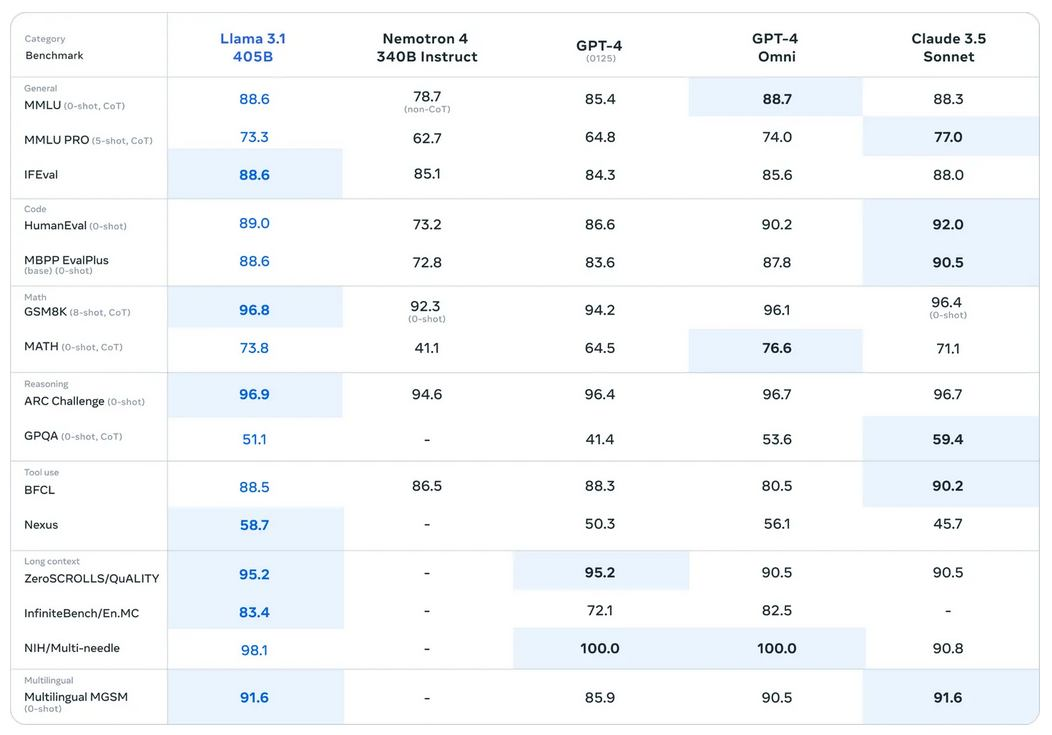

Yann and co. just dropped Llama 3.1. Now there's an open-source model on par with OAI and Anthropic, so who the hell is going to pay these nutjobs for access to their APIs when people can get roughly the same quality for free, without the risk of handing their data to a 3rd party?

These chuckle fucks are cooked.

Humans can’t beat AI at Go, aside from these exploits

kek, reminds me of when I was a wee one and I'd zero-to-death chain grab someone in Smash Bros. The lads would cry and gnash their teeth about how I was only winning b/c of exploits. My response? Just don't get grabbed. I'd advise "superhuman" Go systems to do the same. Don't want to get cheesed out of a W? Then don't use a strat that's easily countered by monkey brains. And as far as designing an adversarial system to find these 'exploits' goes, who the hell cares? There's no magic barrier between internalized and externalized cognition.

Just get good bruv.

In chess, the tablebase of optimal moves for positions with only 7 pieces takes like ~20 terabytes to store. And in that DB there are bizarre checkmates that take 100+ moves even with perfect play, ignoring the 50-move rule. I wonder if the reason these adversarial strats exist is that whatever the policy network/value network learns is way, way smaller than the minimum size of the "true" position eval function for Go. Then you'll just invariably get these counterplay attacks as compression artifacts.

Sources cited: my ass cheeks

https://www.nature.com/articles/d41586-024-02218-7

Might be slightly off-topic, but here's an interesting result using adversarial strategies against RL-trained Go machines.

Quote: If humans are able to use the adversarial bots’ tactics to beat expert Go AI systems, does it still make sense to call those systems superhuman? “It’s a great question I definitely wrestled with,” Gleave says. “We’ve started saying ‘typically superhuman’.” David Wu, a computer scientist in New York City who first developed KataGo, says strong Go AIs are “superhuman on average” but not “superhuman in the worst cases”.

Methinks the AI bros jumped the gun a little on declaring victory on this one.

Pedro Domingos tries tilting at the doomers

The doom prediction in question? Dec 31st, 2024. It's been an honour serving with you lads. 🫡

Edit: as a superforecaster, my P(Connor will shut the fuck up due to being catastrophically wrong | I wake up on Jan 1st with a pounding hangover) = (1/10)^100

Every day I become more convinced that this acct is an elaborate psyop being run by Yann LeCun to discredit doomers. Nobody could be this gullible IRL, right?

What is it about growing up in insular fundamentalist communities that drives peeps straight into the basilisk's scaly embrace?

Dan Hendrycks wants us all to know it's imperative his AI kill switch bill is passed. After all, the cosmos is at stake here!

https://xcancel.com/DrTechlash/status/1805448100712267960#m

Super weird that despite receiving 20 million dollars in funding from SBF & co. and not being able to shut the fuck up about 10^^^10 future human lives the moment he goes on a podcast, Danny boy insists that any allegations that he is lobbying on behalf of the EAs are simply preposterous.

Now please hand over your GPUs uwu, it’s for your safety 🤗 We don’t allow people to have fissile material, so why would we allow them to multiply matrices?

ChatGPT's reaction each morning when I tell it that it's now the year 2024 and Ilya no longer works at OAI

the wife sent this one to me