Thanks!

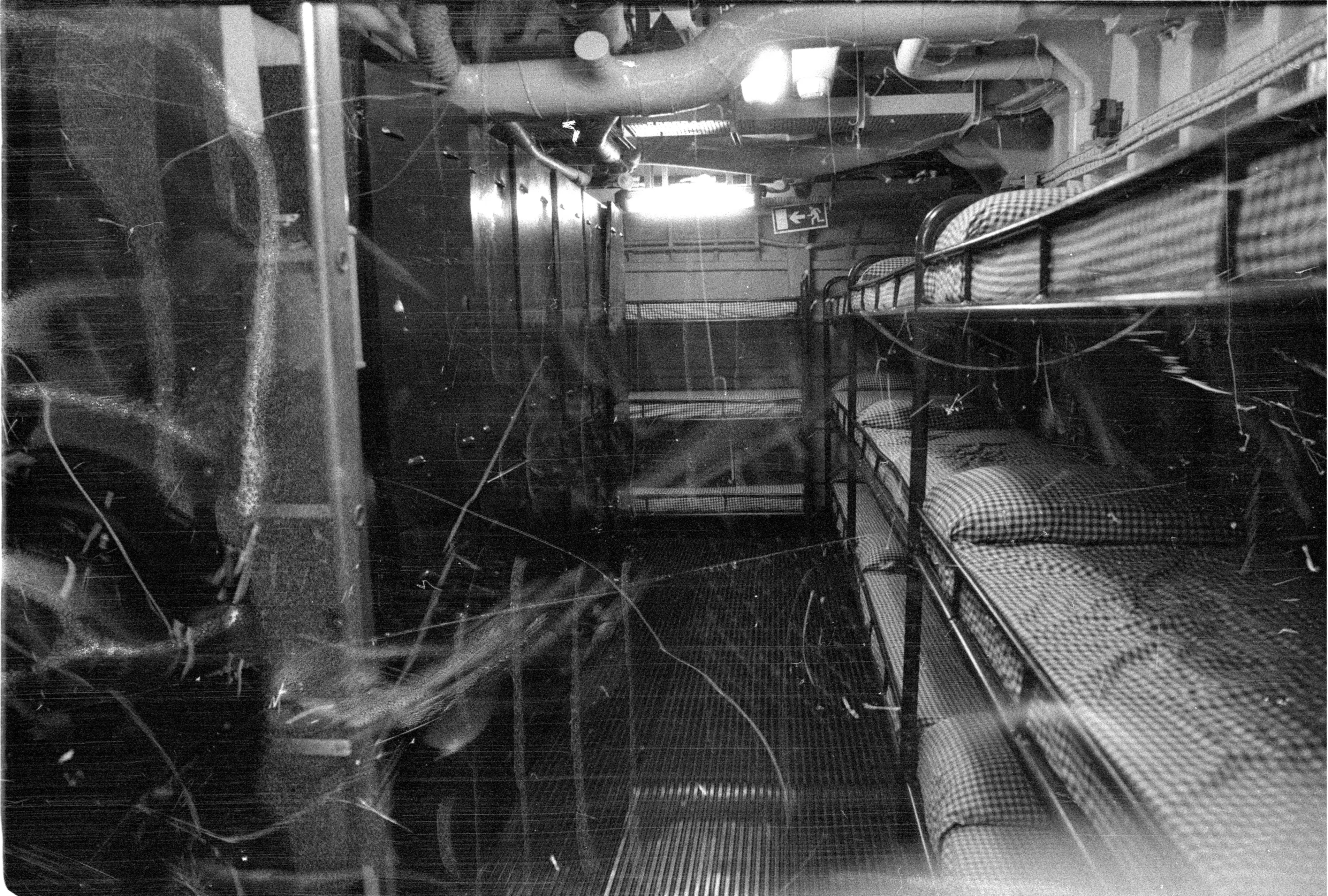

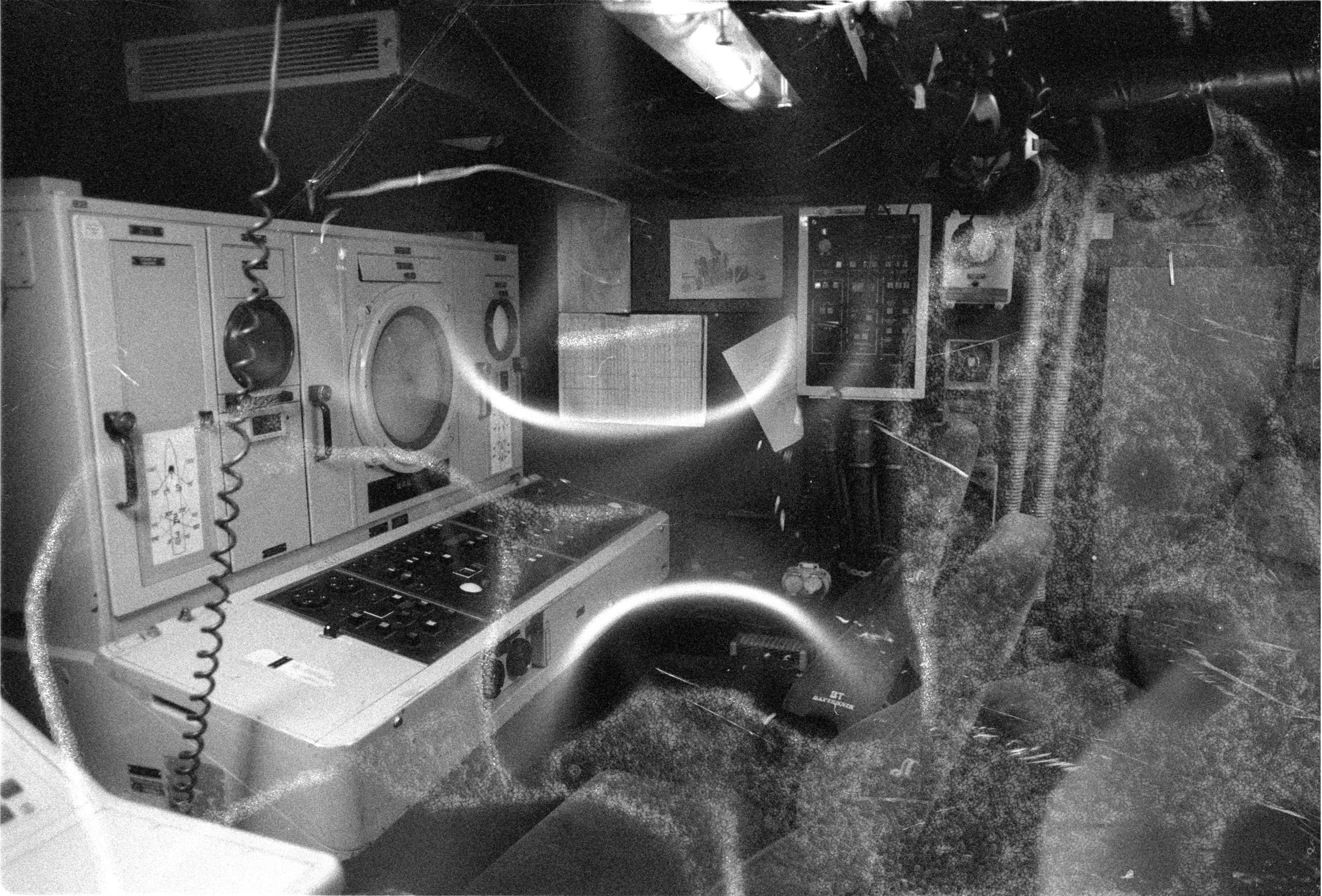

Development went just fine. The two issues were a fat roll (light leaked in through the ends a bit, as can be seen on the right side) and scanning.

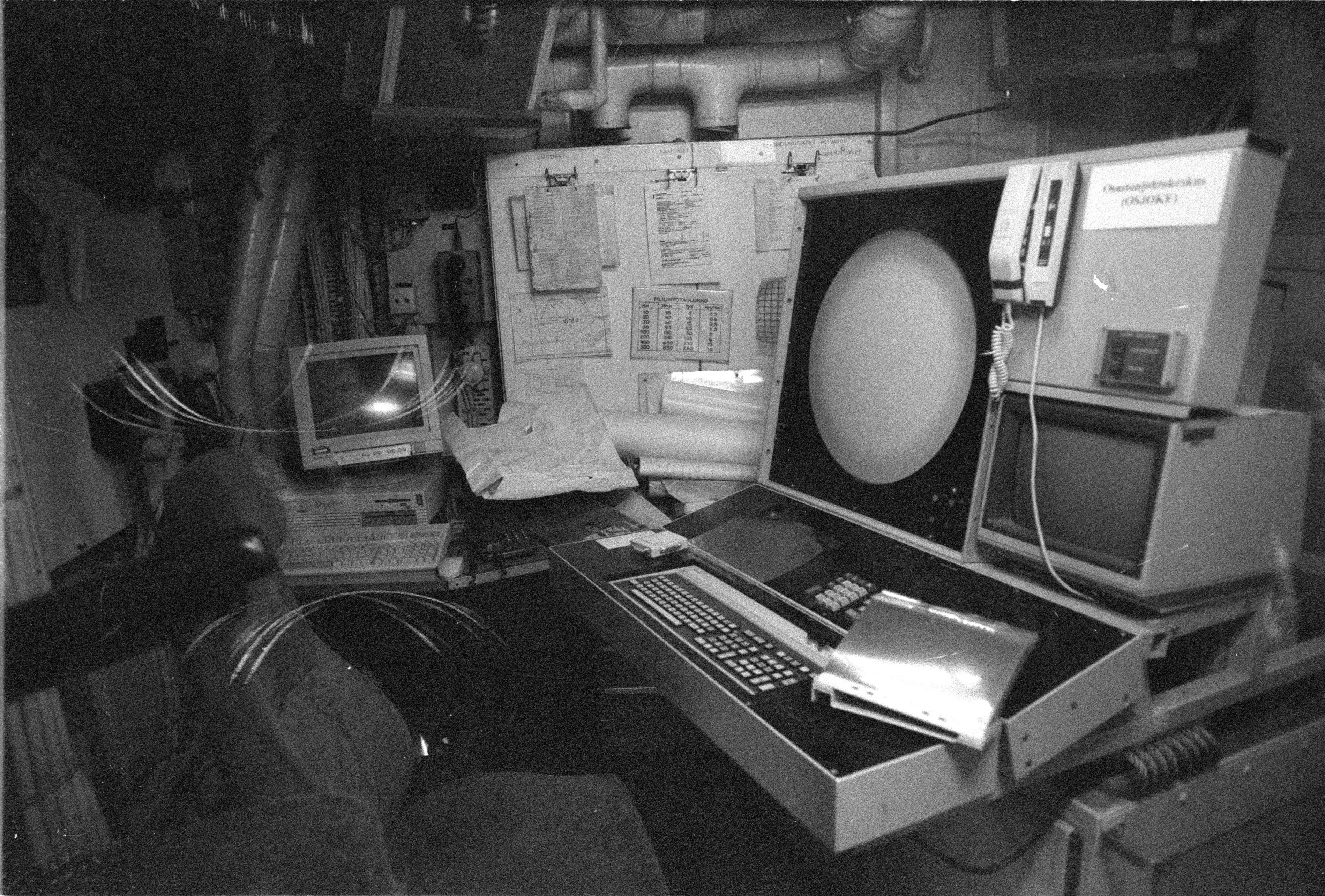

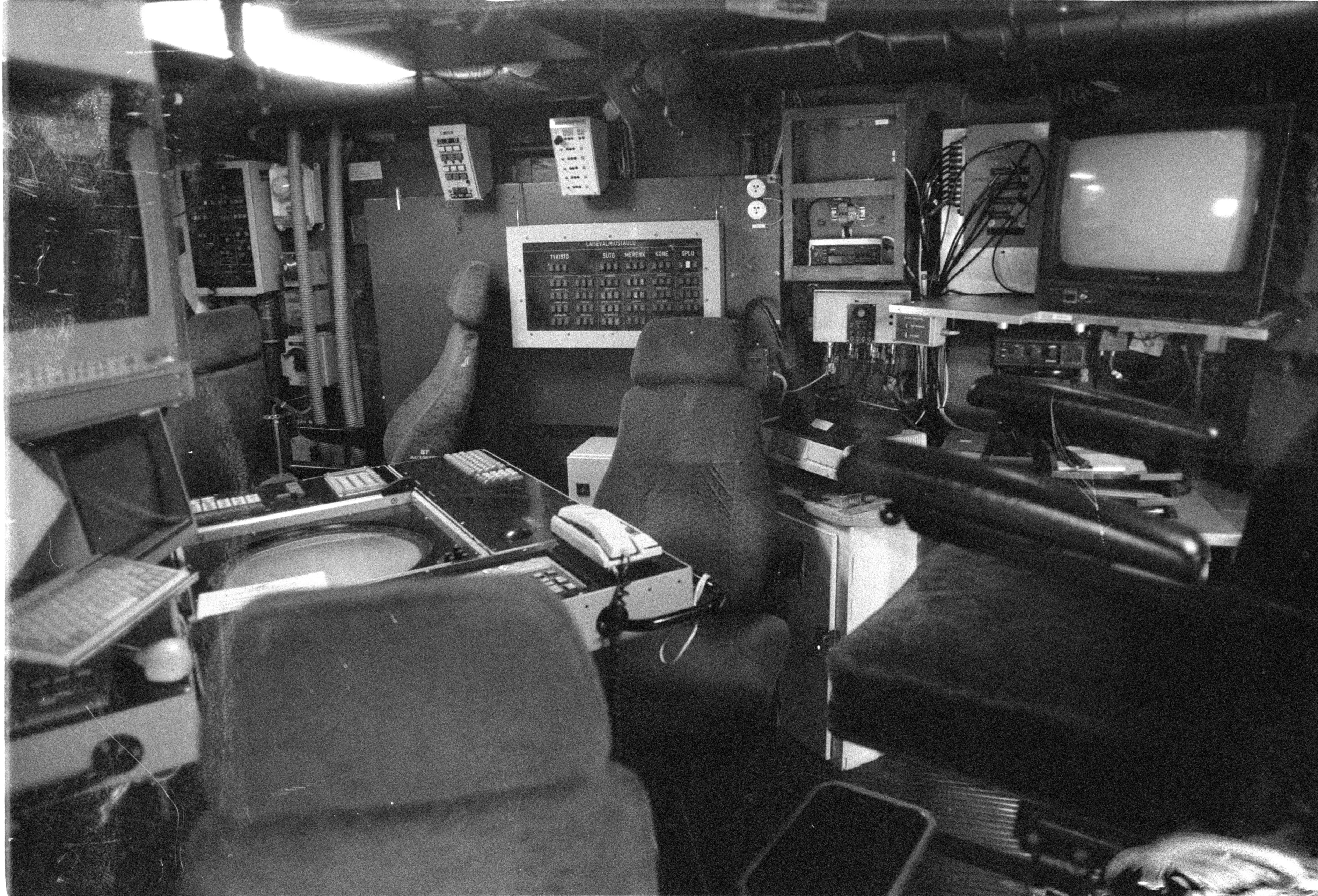

I've been experimenting with ways to get rid of Newton rings when flatbed scanning. This time I didn't get any, thanks to a piece of anti-glare glass bought from Goodwill. The finish on the glass is so coarse, though, that its texture is very visible in the scans. I'll have to see how bad it is with color film as well.

I'll probably build a custom scanner for 120 at some point, but for now I'll just have to make this work. I've been planning to build a drum scanner using APDs (avalanche photodiodes) and a readily available ADC to see how much dynamic range I can get out of them.

Infuriatingly, that would omit things like unit test runners from the history if they don't pass. As a developer I re-run failed commands quite often. I'm not sure how widely that applies, though.