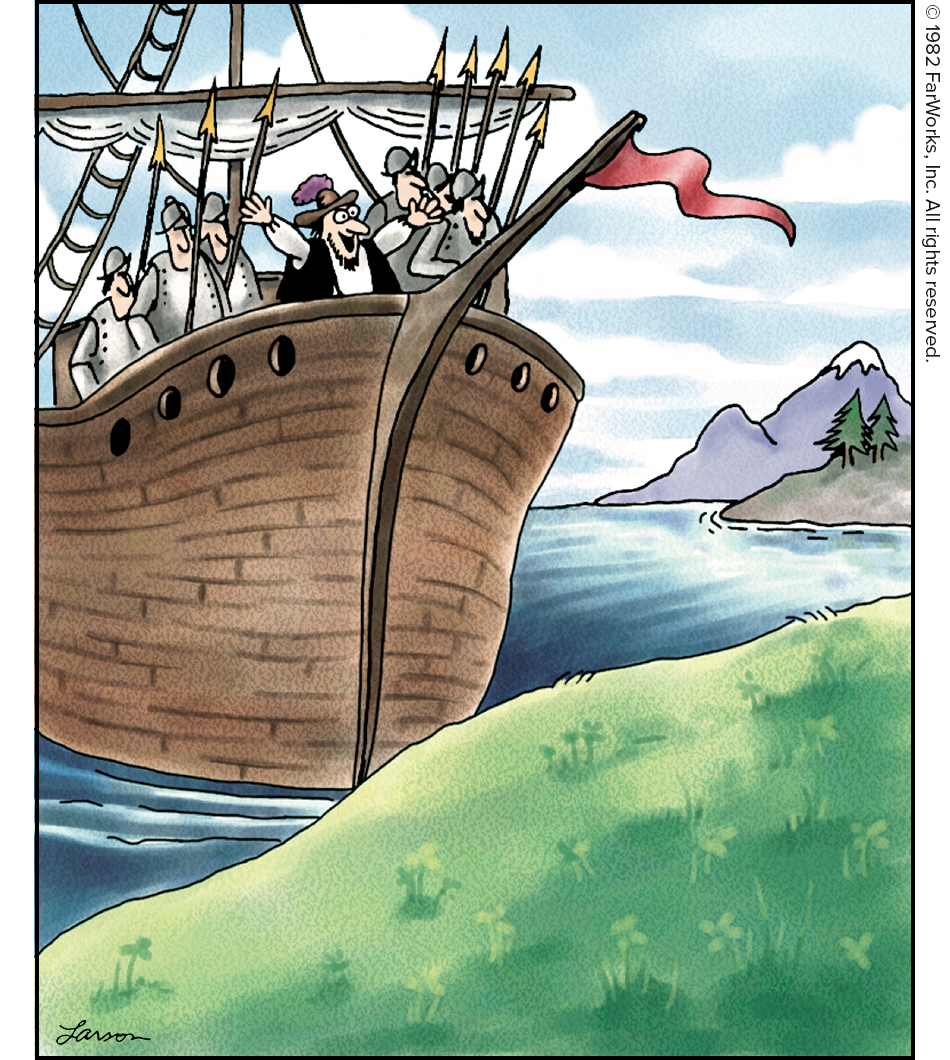

The only true part is they are alone.

sugar_in_your_tea

Don't need arms to make babies...

I, too, like Dutch people.

Ok, so it's effectively the same as P2P, just with some guarantees about how many copies you have.

In a P2P setup, your data would be distributed based on some mathematical formula such that it's statistically very unlikely to be lost even if N clients disconnect from the network. The larger the network, the more likely your data is to stick around. Think of BitTorrent, but where you're randomly selected to seed some number of files in addition to the files you explicitly opt into.
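To make "some mathematical formula" a bit more concrete, here's a rough sketch using rendezvous (highest-random-weight) hashing. That choice is my assumption, not something any particular network does, and the names (`score`, `pick_seeders`, the `peer-N` IDs) are made up for illustration. It also assumes every client knows the current peer list.

```python
import hashlib


def score(peer_id: str, item_id: str) -> int:
    """Deterministic pseudo-random weight for a (peer, item) pair."""
    digest = hashlib.sha256(f"{peer_id}:{item_id}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def pick_seeders(item_id: str, peers: list[str], copies: int = 3) -> list[str]:
    """Return the `copies` peers with the highest weight for this item."""
    ranked = sorted(peers, key=lambda peer: score(peer, item_id), reverse=True)
    return ranked[:copies]


# Hypothetical network of 20 peers; IDs are placeholders.
peers = [f"peer-{i}" for i in range(20)]
seeders = pick_seeders("post-123", peers)

# Simulate the top-ranked seeder disconnecting.
remaining = [p for p in peers if p != seeders[0]]
new_seeders = pick_seeders("post-123", remaining)
```

Nobody picks the seeders by hand: every client computes the same answer from the same peer list. When a seeder drops out, the surviving seeders keep their assignment and the next-highest peer fills the gap.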

The risk w/ something like Nostr is that a lot of people pick the same relays and those relays go down. With the P2P setup I described, data would be distributed according to a mathematical formula, not human decision, so you're more likely to still have access to that data even if a whole country shuts off its internet or something.

Either solution is better than Lemmy/Mastodon or centralized services in terms of surviving something like AWS going down.

Simplex is ready today, assuming you just want 1:1 messaging.

How sure are you? Assign a percentage chance to it and the cost of exposing old messages, and compare that to the cost of this dev effort.

We know governments are using it, and there's likely a lot of sensitive data transmitted through Signal, so the cost of it happening in the next 20 years would still be substantial. Even if the chance of that timeline happening is small, there's still value in investing in forward secrecy.
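The comparison I'm describing is just an expected-value calculation. Here's a minimal sketch; every number in it is made up purely to show the arithmetic, and the function names are hypothetical.

```python
def expected_loss(probability: float, cost_if_it_happens: float) -> float:
    """Chance of the bad outcome times what it would cost."""
    return probability * cost_if_it_happens


def worth_investing(probability: float, cost_if_it_happens: float, dev_cost: float) -> bool:
    """True when the expected loss exceeds the cost of the dev effort."""
    return expected_loss(probability, cost_if_it_happens) > dev_cost


# Placeholder inputs, not real estimates:
# a 2% chance of exposure, a very large cost if it happens, a modest dev cost.
small_chance_big_cost = worth_investing(0.02, 1_000_000_000, 5_000_000)

# Same dev cost, but a tiny chance and a small cost if it happens.
tiny_chance_small_cost = worth_investing(0.001, 1_000_000, 5_000_000)
```

With those placeholder inputs, the first case comes out in favor of doing the work and the second doesn't. A small probability can still justify the effort when the cost of being wrong is large enough.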

Monero isn't like the other three, it's P2P with no single points of failure.

I haven't looked too closely at Nostr, but I'm assuming it's typically federated with relays acting like Lemmy/Mastodon instances in terms of data storage (it's a protocol, so I suppose posts could be local and switching relays is easy). If your instance goes down, you're just as screwed as you would be with a centralized service, because Lemmy and Mastodon are centralized services that share data. If your instance doesn't go down but a major one does, your experience will be significantly degraded.

The only way to really solve this problem is with P2P services, like Monero, or to have sufficient diversity in your infrastructure that a single major failure doesn't kill the service. P2P is easy for something like a currency, but much more difficult for social media where you expect some amount of moderation, and redundancy is expensive and also complex.

Yup, I remember reading the manual on the toilet while my sibling was playing so when it was my turn, I'd have a leg up. We would take turns, cheering each other on as we got past a difficult part, and sharing secrets that we found.

With the internet, I can just look up a walkthrough pretty soon after the game launches, so I have no reason to look at the manual (if there is one) or talk to anyone else.

I think that's why competitive MP has taken off. People want that social experience, and that's filling in for what used to exist. I remember PvP being a thing, but I also remember helping each other out on a SP game being a thing, so both were social activities (if it wasn't a sibling, it was a friend or coworker).

Unit tests aren't intended to find bugs, they're intended to prove correctness. There should be a separate QA process for finding bugs, which involves integration testing. When QA inevitably finds a bug, the unit tests get updated with that case (and any similar cases).

> only cover cases that you know will work

And that's what code reviews are for. If your tests don't sufficiently cover the logic, the change should be rejected until they do. It's a lot easier to verify the tests cover the logic if the tests are submitted w/ the logic changes.
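As a rough illustration of that workflow, here's a sketch where QA found a crash on empty input and the fix ships with a test for that case plus a similar one. The `average` function and the bug are hypothetical, just something small enough to show the pattern.

```python
import unittest


def average(values):
    """Hypothetical function under test."""
    if not values:
        # Fix added after QA reported a crash (ZeroDivisionError) on empty input.
        return 0.0
    return sum(values) / len(values)


class AverageTest(unittest.TestCase):
    def test_typical_values(self):
        # Original test: the case we already knew worked.
        self.assertEqual(average([2, 4, 6]), 4.0)

    def test_empty_input_regression(self):
        # Added alongside the fix for the QA-reported bug.
        self.assertEqual(average([]), 0.0)

    def test_single_value(self):
        # A "similar case" added at the same time: another edge of the input size.
        self.assertEqual(average([5]), 5.0)
```

Run it with `python -m unittest`. Because the tests land in the same change as the fix, a reviewer can check that each branch of the logic has a matching test.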

I did it in a few weeks. I basically swapped discs while playing games, before going to work, before bed, etc. It was tedious, but I got them all.

Now when I buy one, I'll rip it first before watching.

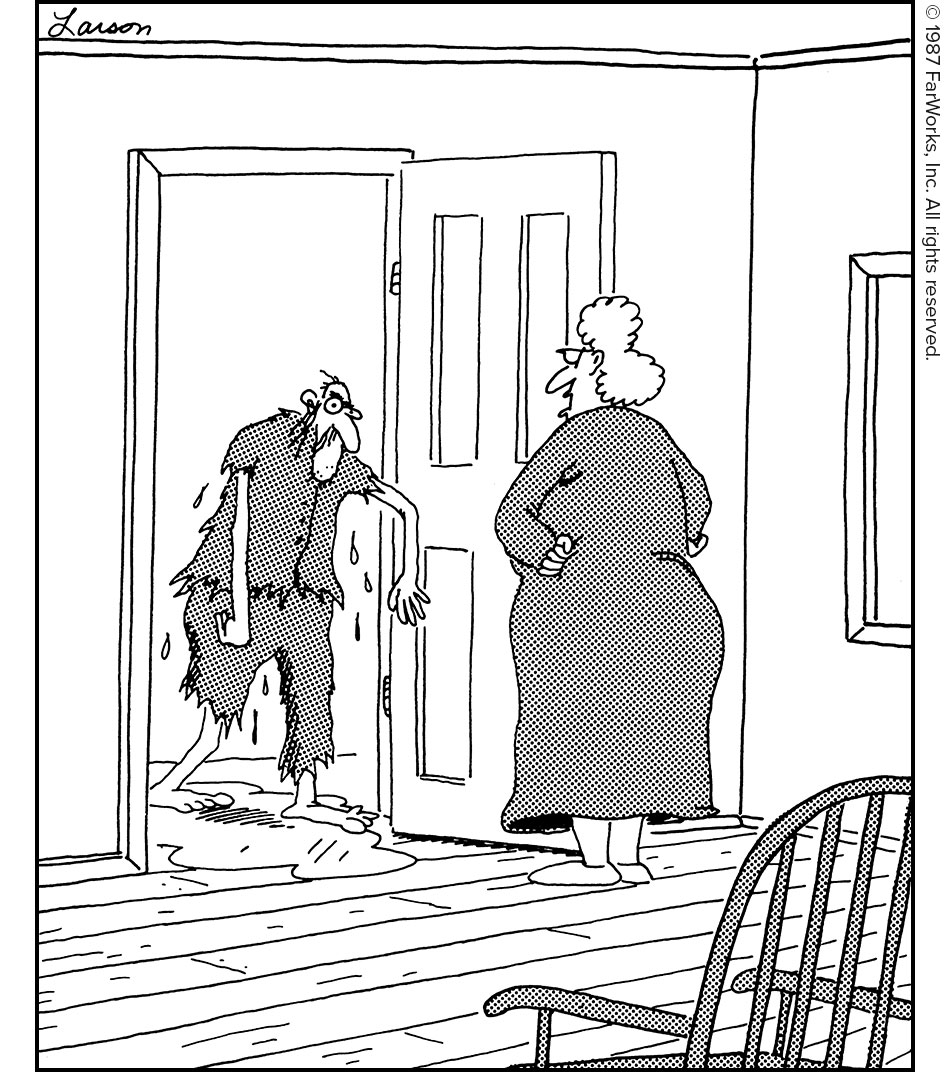

Yup, my SO isn't very technically inclined, so describing anything I do in simple terms makes it sound super lame.

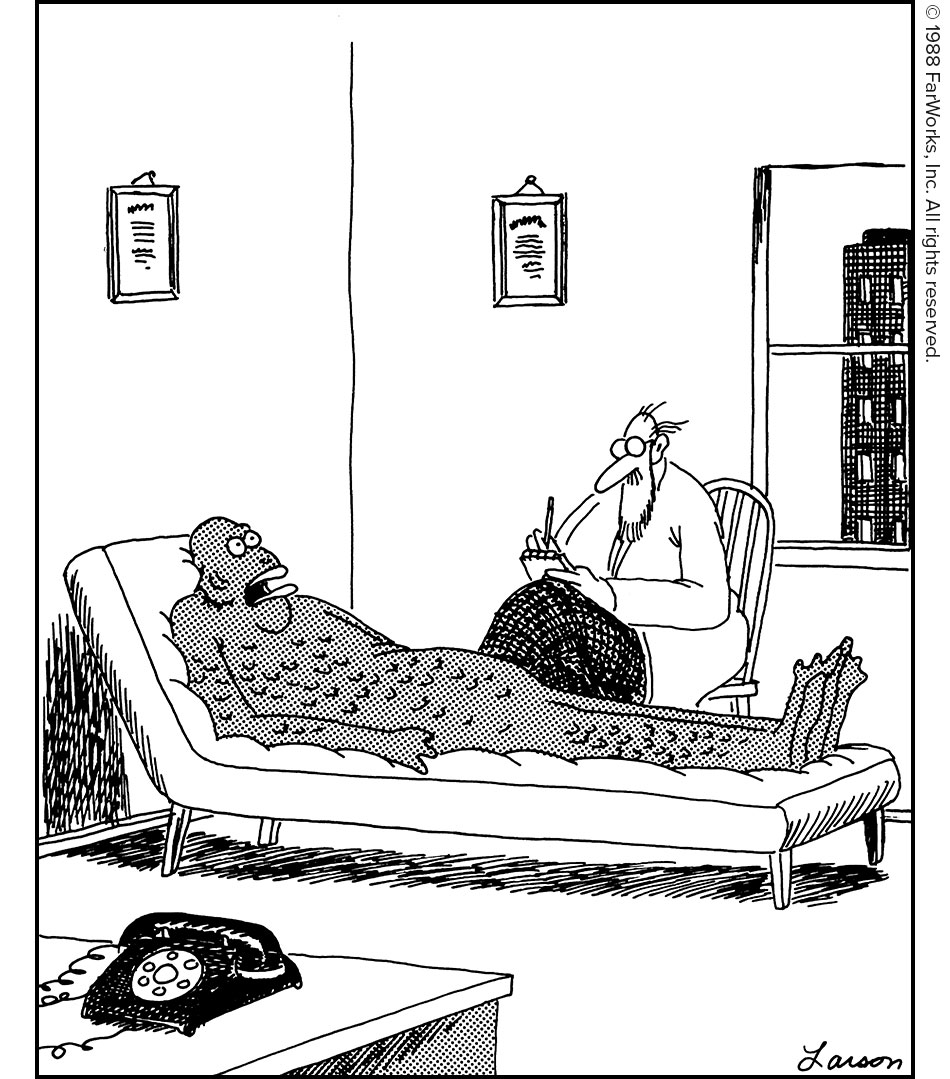

For example, I'm working on a P2P reddit clone, and here's how it goes:

Me: It's like reddit, but there's no website and it's all on your computer.

SO: So a note pad?

Me: No, it's on other people's computers too, and anything I write shows up instantly on theirs.

SO: Notepad with Dropbox?

Me: No, I don't have all of the data, and neither does anyone else. We only get what we subscribe to. Like reddit!

SO: So Dropbox, but with multiple documents.

Me: Sure...

SO: (pats me on the head) I'm glad you're excited about it, now do the dishes.

That's ridiculous. The app should merely talk to the device over wifi, if available. The cloud should only be used to connect from outside the wifi network.

Why is everything so crappy?